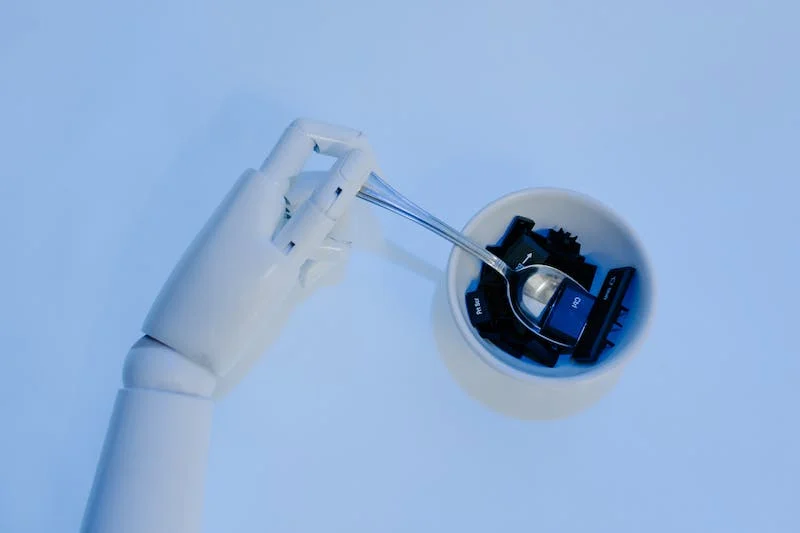

In recent years, artificial intelligence has leapt from research labs into the center of industry and society. From generative models capable of writing code and creating art, to large-scale systems powering autonomous vehicles and logistics, AI has become both indispensable and power-hungry. Yet as demand grows, a fundamental problem is emerging: today’s computing infrastructure is straining under the weight of AI workloads. Enter neuromorphic and edge AI hardware—two converging trends that aim to reimagine how machines compute, learn, and interact with the physical world.

Why Traditional Chips Are Reaching Their Limits

Modern AI, particularly deep learning, thrives on vast parallelism: training runs trillions of operations to adjust billions of parameters. Processors such as GPUs and TPUs are designed for this type of work, but even they are reaching limits. Training a state-of-the-art model can consume megawatt-hours of electricity, cost millions of dollars, and leave a substantial carbon footprint. Meanwhile, as AI expands into everyday devices, from smartphones to drones, there is a growing need for powerful but lightweight compute at the edge, where power and bandwidth are limited.

For a deeper dive into the challenges of AI hardware, see MIT Technology Review on AI chips.

Neuromorphic Computing: Mimicking the Brain

Neuromorphic computing draws direct inspiration from the human brain. Instead of processing information sequentially like a CPU, or in massive but rigid parallel arrays like a GPU, neuromorphic chips emulate the way biological neurons and synapses fire and communicate. Each artificial neuron accumulates input and emits a spike only when that input crosses a threshold, so information is transmitted sparsely and the circuit stays largely idle when nothing is happening. Connections can also adapt dynamically. The result is a computational paradigm that can, in principle, be orders of magnitude more energy-efficient.
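
To make the spiking idea concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of spiking behavior. It is not how Loihi, TrueNorth, or any other chip is actually programmed, and the class name, leak factor, threshold, and input values are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron with illustrative (assumed) parameters."""
    leak: float = 0.9        # fraction of membrane potential kept each step
    threshold: float = 1.0   # potential at which the neuron fires
    potential: float = 0.0   # current membrane potential

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        # Old potential decays (leaks), then new input is integrated.
        self.potential = self.leak * self.potential + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def spike_times(neuron: LIFNeuron, inputs: list[float]) -> list[int]:
    """Return the time steps at which the neuron fired."""
    return [t for t, current in enumerate(inputs) if neuron.step(current)]


# Hypothetical input: a short strong burst, silence, then a weak steady drive.
inputs = [0.0, 0.6, 0.6, 0.0, 0.0, 0.0, 0.3, 0.3, 0.3, 0.3]
times = spike_times(LIFNeuron(), inputs)
print(times)  # → [2, 9]
```

Ten input steps produce only two output events. That sparsity is the property neuromorphic designs try to exploit: downstream circuitry only has work to do when a spike arrives.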

One of the best-known projects in this space is Intel’s Loihi, a neuromorphic research chip that uses asynchronous spiking neural networks to perform AI tasks. IBM has developed TrueNorth, another chip built to emulate one million neurons. Other efforts, including academic and startup initiatives, are pushing toward architectures that combine memory and compute—overcoming the so-called von Neumann bottleneck, in which separate memory and processing units waste energy by shuttling data back and forth. More information is available via Intel’s neuromorphic research page.

Edge AI Hardware: Computing Close to the Source

Parallel to neuromorphic efforts, edge AI hardware is gaining traction as a practical response to data-intensive AI applications. Edge computing moves processing closer to where data is generated—whether in a factory sensor, a medical device, or an autonomous drone. Instead of sending streams of raw data back to the cloud, devices can process information locally, reducing latency, improving privacy, and lowering bandwidth costs.
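
The bandwidth argument can be sketched in a few lines. The example below assumes a hypothetical temperature sensor and compares the size of a raw window of readings with a locally computed summary. The function names, threshold, and readings are invented for illustration and do not correspond to any particular edge platform.

```python
import json
from statistics import mean


def summarize_window(samples: list[float], threshold: float) -> dict:
    """Reduce a window of raw readings to a small summary with an alert flag."""
    peak = max(samples)
    return {
        "mean": round(mean(samples), 2),
        "peak": peak,
        "alert": peak > threshold,  # only anomalies need urgent attention
    }


def payload_bytes(obj) -> int:
    """Size of the JSON-encoded payload that would be sent upstream."""
    return len(json.dumps(obj).encode("utf-8"))


# Hypothetical window of 100 readings with a single anomalous spike.
raw_window = [20.0 + 0.1 * (i % 5) for i in range(100)]
raw_window[42] = 35.7

summary = summarize_window(raw_window, threshold=30.0)
raw_size = payload_bytes(raw_window)
summary_size = payload_bytes(summary)

print(summary)
print(f"raw: {raw_size} bytes, summary: {summary_size} bytes")
```

The device keeps the raw stream to itself and transmits only the summary, which also illustrates the privacy point: the cloud sees the alert, not every reading. A real deployment would run a trained model rather than a simple threshold, but the trade-off is the same.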

Examples of edge AI hardware include Google’s Edge TPU, NVIDIA’s Jetson platform, and specialized chips from startups like Mythic and Syntiant. For developers interested in practical implementation, check out Google’s Edge TPU documentation.

Convergence: Neuromorphic Meets Edge

While neuromorphic computing and edge AI may seem like separate paths, they share common goals: energy efficiency, low latency, and adaptability. Neuromorphic hardware could become a natural fit for edge devices, where power budgets are tight and workloads are dynamic. Imagine a swarm of drones using neuromorphic chips to process vision and navigation tasks, coordinating in real time without overloading central servers. Or consider smart prosthetics with neuromorphic circuits that respond as quickly and flexibly as biological nerves.

Challenges Ahead

Despite their promise, neuromorphic and edge AI hardware face formidable hurdles.

- Fabrication Complexity: Building chips that integrate memory, processing, and neural-like architectures is technically difficult and costly.

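- Programming Paradigms: Neuromorphic systems require new algorithms and software frameworks, because mainstream deep learning toolchains target dense tensor operations rather than sparse, event-driven spikes.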

- Standardization: Without shared benchmarks and platforms, it is difficult to compare performance across different neuromorphic designs.

- Thermal and Power Constraints: Even at the edge, managing heat and power is a central challenge.

Learn more about the global semiconductor industry from the Semiconductor Industry Association (SIA).

Who Is Investing?

Governments and industry are both heavily involved. The U.S. Defense Advanced Research Projects Agency (DARPA) funded neuromorphic research through its SyNAPSE program, launched in 2008. Companies like Intel, IBM, and Qualcomm continue to invest in experimental chips. In Europe, the Human Brain Project supported neuromorphic platforms such as SpiNNaker and BrainScaleS. Meanwhile, edge AI hardware has become a commercial battleground, with NVIDIA, Google, and Apple integrating custom AI accelerators into consumer devices.

Startups are also innovating aggressively. Companies such as BrainChip Holdings have developed commercial neuromorphic processors for applications ranging from cybersecurity to autonomous systems. Others, like Mythic, focus on analog compute-in-memory designs for ultra-efficient edge inference.

Conclusion

The quest to build brain-inspired hardware and deploy AI at the edge reflects a broader truth: computing must evolve to meet the demands of the future. Traditional architectures are not enough. Whether through spiking neural networks, compute-in-memory devices, or low-power edge accelerators, the next generation of AI hardware is likely to look—and act—much more like the biological systems it seeks to emulate.

As researchers and engineers push forward, the hope is that we can capture some of the brain’s remarkable efficiency, adaptability, and intelligence in silicon. If successful, neuromorphic and edge AI hardware could form the foundation of a new era in computing—one that is as transformative as the shift from mainframes to personal computers, or from desktops to mobile.